Cache Mapping-

Before you go through this article, make sure that you have gone through the previous article on Cache Mapping.

| Cache mapping is a technique by which the contents of main memory are brought into the cache memory. |

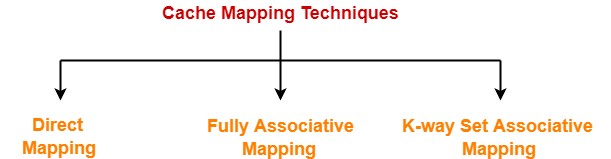

Different cache mapping techniques are-

- Direct Mapping

- Fully Associative Mapping

- K-way Set Associative Mapping

In this article, we will discuss direct mapping in detail.

Direct Mapping-

In direct mapping,

- A particular block of main memory can map to only one particular line of the cache.

- The line number of cache to which a particular block can map is given by-

| Cache line number = ( Main Memory Block Address ) Modulo ( Number of lines in Cache ) |
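The modulo rule above can be tried out directly. The following is a minimal sketch; the function name `cache_line_number` and the 4-line cache are hypothetical choices for illustration. It lists which main memory blocks end up competing for each cache line.

```python
def cache_line_number(block_address: int, num_lines: int) -> int:
    """Return the only cache line that a main memory block can map to."""
    if num_lines <= 0:
        raise ValueError("num_lines must be positive")
    return block_address % num_lines


# Hypothetical cache with 4 lines: list which of blocks 0..11 share each line.
NUM_LINES = 4
for line in range(NUM_LINES):
    blocks = [b for b in range(12) if cache_line_number(b, NUM_LINES) == line]
    print(f"line {line}: blocks {blocks}")
```

With 4 lines, blocks 0, 4 and 8 all map to line 0, blocks 1, 5 and 9 to line 1, and so on. Only one of the blocks sharing a line can be held in the cache at a time.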

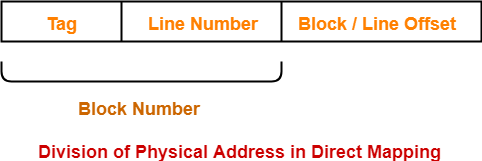

Division of Physical Address-

In direct mapping, the physical address is divided as-

| Tag | Line Number | Block / Word Offset |
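Assuming the usual Tag | Line Number | Block Offset layout, with the number of lines and the block size both powers of two, the fields can be extracted with shifts and masks. This is a sketch under those assumptions; the helper names `log2_exact` and `split_address` and the example geometry are hypothetical.

```python
def log2_exact(n: int) -> int:
    """Return log2(n), requiring n to be a positive power of two."""
    if n <= 0 or n & (n - 1):
        raise ValueError(f"{n} is not a power of two")
    return n.bit_length() - 1


def split_address(address: int, address_bits: int, num_lines: int, block_size: int):
    """Split a physical address into (tag, line number, block offset) fields."""
    if not 0 <= address < (1 << address_bits):
        raise ValueError("address does not fit in address_bits")
    offset_bits = log2_exact(block_size)   # selects a word inside the block
    line_bits = log2_exact(num_lines)      # selects the cache line
    if line_bits + offset_bits > address_bits:
        raise ValueError("cache geometry does not fit the address width")
    offset = address & (block_size - 1)
    line = (address >> offset_bits) & (num_lines - 1)
    tag = address >> (offset_bits + line_bits)   # all remaining high-order bits
    return tag, line, offset


# Hypothetical geometry: 16-bit addresses, 64 cache lines, 16 words per block.
print(split_address(0xABCD, 16, 64, 16))
```

Here the offset takes 4 bits, the line number 6 bits and the tag the remaining 6 bits, so the call returns `(42, 60, 13)`. The line field 60 equals the block address `0xABC` (2748) modulo 64, which agrees with the modulo rule for the cache line number.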

Direct Mapped Cache-

| Direct mapped cache employs direct cache mapping technique. |

The following steps explain the working of direct mapped cache-

After the CPU generates a memory request,

- The line number field of the address is used to access the particular line of the cache.

- The tag field of the CPU address is then compared with the tag of the line.

- If the two tags match, a cache hit occurs and the desired word is found in the cache.

- If the two tags do not match, a cache miss occurs.

- In case of a cache miss, the required word has to be brought from the main memory.

- It is then stored in the cache together with the new tag replacing the previous one.
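The lookup steps above can be simulated with a toy model. This sketch makes a few simplifying assumptions: it works on block addresses instead of full physical addresses, stores only tags (no data), and uses `None` to stand for an empty line. The class name `DirectMappedCache` is hypothetical.

```python
class DirectMappedCache:
    """Toy direct mapped cache that tracks only the tag held by each line."""

    def __init__(self, num_lines: int):
        self.num_lines = num_lines
        self.tags = [None] * num_lines   # None marks an empty line

    def access(self, block_address: int) -> bool:
        """Return True on a hit; on a miss, load the block and return False."""
        line = block_address % self.num_lines    # the only line this block may use
        tag = block_address // self.num_lines    # remaining high-order part
        if self.tags[line] == tag:
            return True                          # tags match: cache hit
        self.tags[line] = tag                    # miss: new tag replaces the old one
        return False


cache = DirectMappedCache(num_lines=4)
trace = [0, 1, 0, 4, 0, 1]
print(["hit" if cache.access(b) else "miss" for b in trace])
```

For this trace the result is miss, miss, hit, miss, miss, hit. Blocks 0 and 4 both map to line 0, so each access to one of them evicts the other even though lines 2 and 3 stay empty.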

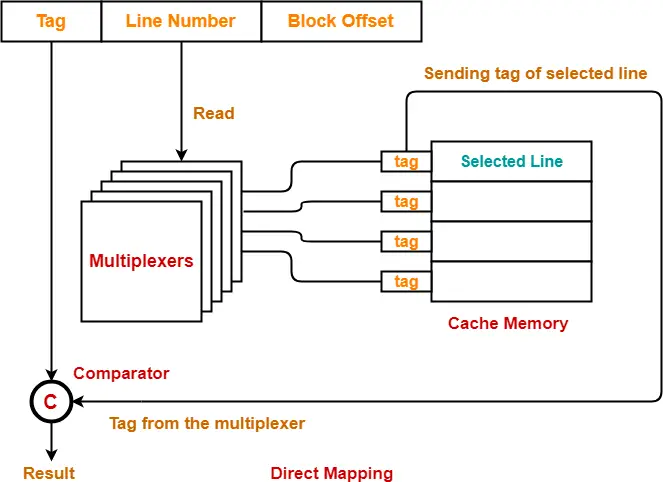

Implementation-

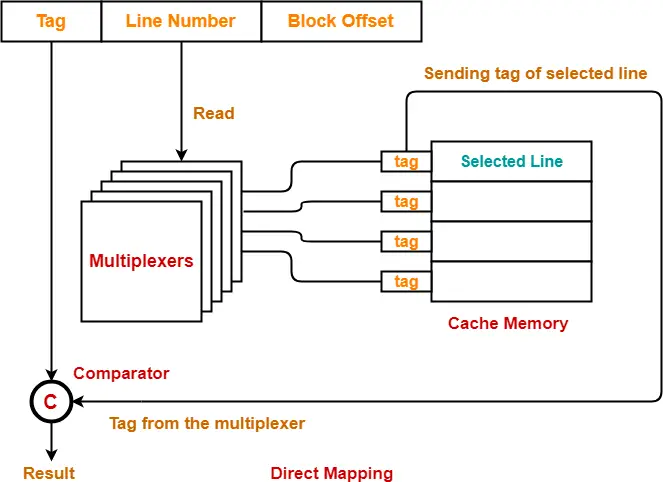

The following diagram shows the implementation of direct mapped cache-

(For simplicity, this diagram shows does not show all the lines of multiplexers)

The steps involved are as follows-

Step-01:

- All the multiplexers read the line number from the generated physical address in parallel, using their select lines.

- To read a line number of L bits, each multiplexer must have L select lines.
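Since L select lines can address 2^L inputs, the number of select lines is log2 of the number of cache lines. A short sketch, where the function name `select_lines` is hypothetical; for a line count that is not a power of two it rounds up.

```python
def select_lines(num_lines: int) -> int:
    """Select lines a multiplexer needs to address num_lines cache lines."""
    if num_lines < 1:
        raise ValueError("num_lines must be at least 1")
    # Smallest L with 2**L >= num_lines; equals log2(num_lines) for powers of two.
    return (num_lines - 1).bit_length()


for lines in (2, 64, 1024):
    print(f"{lines} cache lines need {select_lines(lines)} select lines")
```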

Step-02:

- After reading the line number, each multiplexer selects the corresponding line of the cache memory from among its input lines. All the multiplexers do this in parallel.

- Number of input lines each multiplexer must have = Number of lines in the cache memory

Step-03:

- Each multiplexer outputs the tag bit it has selected from that line to the comparator using its output line.

- Number of output lines in each multiplexer = 1.
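Steps 01 to 03 together describe an N x 1 multiplexer: one input per cache line, log2(N) select lines carrying the line number, and a single output. A minimal sketch of that behaviour, assuming the select bits are given most significant bit first; the function name `mux` is hypothetical.

```python
def mux(inputs: list, select_bits: list) -> int:
    """N x 1 multiplexer: the select bits (MSB first) pick one input bit."""
    if len(inputs) != 2 ** len(select_bits):
        raise ValueError("an N x 1 multiplexer needs log2(N) select lines")
    index = 0
    for bit in select_bits:          # decode the line number on the select lines
        index = (index << 1) | bit
    return inputs[index]             # the single output line


# Hypothetical 4-line cache: input i carries one tag bit stored in line i.
print(mux([0, 1, 1, 0], [1, 0]))     # line number 2 selects input 2
```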

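It is important to understand-

Number of multiplexers required = Number of bits in the tag

Each multiplexer is configured to output the tag bit at one particular position. Example-

- The 1st multiplexer outputs the 1st bit of the tag stored in the selected line.

- The 2nd multiplexer outputs the 2nd bit of that tag, and so on.

So, each tag bit needs its own multiplexer, and together the multiplexers deliver the complete tag of the selected line to the comparator in parallel.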
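Why one multiplexer is needed per tag bit can be seen in a small model of the multiplexer bank. This is a sketch, not the exact circuit in the diagram: tags are stored as lists of bits, the select bits are given most significant bit first, and the function name `read_tag` is hypothetical.

```python
def read_tag(tag_store: list, select_bits: list) -> list:
    """Read the selected line's tag using one multiplexer per tag bit."""
    # The line number on the shared select lines (MSB first).
    line = 0
    for bit in select_bits:
        line = (line << 1) | bit
    tag_width = len(tag_store[0])
    tag = []
    for i in range(tag_width):                         # multiplexer number i
        inputs = [line_tag[i] for line_tag in tag_store]   # bit i of every line
        tag.append(inputs[line])                       # its single output bit
    return tag


# Hypothetical 4-line cache with 3-bit tags, so 3 multiplexers of size 4 x 1.
tags = [[0, 0, 1], [1, 0, 1], [1, 1, 0], [0, 1, 1]]
print(read_tag(tags, [1, 0]))   # tag held by line 2
```

The loop runs once per tag bit, so the number of multiplexers equals the tag width, and each multiplexer has one input per cache line.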

Step-04:

- The comparator compares the tag coming from the multiplexers with the tag field of the generated address.

- Only one comparator is required for the comparison where-

Size of comparator = Number of bits in the tag

- If the two tags match, a cache hit occurs otherwise a cache miss occurs.
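One common way to build an equality comparator is to XNOR each pair of bits and AND the results; the sketch below assumes that design, which may differ from the one in the diagram. Its width must equal the number of tag bits. The function name `compare_tags` is hypothetical.

```python
def compare_tags(stored_tag_bits: list, address_tag_bits: list) -> bool:
    """Tag comparator: XNOR each bit pair, then AND all the results."""
    if len(stored_tag_bits) != len(address_tag_bits):
        raise ValueError("comparator width must equal the number of tag bits")
    match = 1
    for a, b in zip(stored_tag_bits, address_tag_bits):
        match &= 1 - (a ^ b)   # XNOR of two bits is 1 when they are equal
    return bool(match)         # True means cache hit, False means cache miss


print(compare_tags([1, 1, 0], [1, 1, 0]))
print(compare_tags([1, 1, 0], [1, 0, 0]))
```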

Hit latency-

The time taken to find out whether the required word is present in the cache memory or not is called the hit latency.

For direct mapped cache,

| Hit latency = Multiplexer latency + Comparator latency |
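Because all the tag multiplexers work in parallel, the comparator waits only for the slowest one, so the multiplexer latency is counted once and not once per multiplexer. A small sketch with hypothetical latencies in picoseconds; the function name `hit_latency_ps` is also hypothetical.

```python
def hit_latency_ps(mux_latencies_ps: list, comparator_latency_ps: int) -> int:
    """Hit latency = latency of the (slowest) parallel multiplexer + comparator."""
    return max(mux_latencies_ps) + comparator_latency_ps


# Hypothetical figures: 6 identical tag multiplexers of 800 ps, comparator of 500 ps.
print(hit_latency_ps([800] * 6, 500))   # 1300, not 6 * 800 + 500
```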


Important Results-

Following are a few important results for direct mapped cache-

- Block j of main memory can map only to line number (j mod number of lines in cache) of the cache.

- Number of multiplexers required = Number of bits in the tag

- Size of each multiplexer = Number of lines in cache x 1

- Number of comparators required = 1

- Size of comparator = Number of bits in the tag

- Hit latency = Multiplexer latency + Comparator latency
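The results above can be collected into one calculator. This is a sketch that assumes byte-addressable memory and power-of-two sizes; the function names `log2_exact` and `direct_mapped_results` and the example sizes are hypothetical.

```python
def log2_exact(n: int) -> int:
    """Return log2(n), requiring n to be a positive power of two."""
    if n <= 0 or n & (n - 1):
        raise ValueError(f"{n} is not a power of two")
    return n.bit_length() - 1


def direct_mapped_results(main_memory_bytes: int, cache_bytes: int, block_bytes: int) -> dict:
    """Derive the direct mapped cache results (byte-addressable memory assumed)."""
    address_bits = log2_exact(main_memory_bytes)
    offset_bits = log2_exact(block_bytes)
    num_lines = cache_bytes // block_bytes
    line_bits = log2_exact(num_lines)
    tag_bits = address_bits - line_bits - offset_bits
    if tag_bits < 0:
        raise ValueError("cache cannot be larger than main memory")
    return {
        "lines": num_lines,
        "tag_bits": tag_bits,
        "multiplexers": tag_bits,                   # one per tag bit
        "multiplexer_size": f"{num_lines} x 1",     # one input per cache line
        "comparators": 1,
        "comparator_size_bits": tag_bits,
    }


# Hypothetical: 64 KB main memory, 1 KB cache, 16-byte blocks.
print(direct_mapped_results(2**16, 2**10, 16))
```

For these sizes the cache has 64 lines and a 6-bit tag, so it needs 6 multiplexers of size 64 x 1 and a single 6-bit comparator.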

To gain a better understanding of direct mapping, go through the next article, Practice Problems On Direct Mapping.
