Process Synchronization-

Before you go through this article, make sure that you have gone through the previous articles on Process Synchronization and the synchronization mechanisms discussed in them.

In this article, we will discuss practice problems based on synchronization mechanisms.

PRACTICE PROBLEMS BASED ON SYNCHRONIZATION MECHANISMS-

Problem-01:

The enter_CS( ) and leave_CS( ) functions to implement critical section of a process are realized using test and set instruction as follows-

void enter_CS(X)

{

while (test-and-set(X));

}

void leave_CS(X)

{

X = 0;

}

In the above solution, X is a memory location associated with the CS and is initialized to 0. Now, consider the following statements-

- I. The above solution to CS problem is deadlock-free

- II. The solution is starvation free

- III. The processes enter CS in FIFO order

- IV. More than one process can enter CS at the same time.

Which of the above statements is true?

- (A) I only

- (B) I and II

- (C) II and III

- (D) IV only

Solution-

Clearly, the given mechanism is a test-and-set lock, which has the following characteristics-

- It ensures mutual exclusion.

- It ensures freedom from deadlock.

- It may cause a process to starve, because a spinning process can lose the race for the lock every time the lock is released.

- It does not guarantee that processes enter the critical section in FIFO order; if it did, there would be no starvation.

Thus, only statement I is true and Option (A) is correct.
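
The test-and-set lock from Problem-01 can be sketched in C11 as shown below. This is only an illustrative sketch: the `tsl_lock` type and the use of `atomic_flag` from `<stdatomic.h>` are assumptions made here, not part of the original problem, which leaves the test-and-set instruction abstract.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* X from the problem: a clear flag (0) means the critical section is free.
   The struct name tsl_lock is hypothetical. */
typedef struct {
    atomic_flag x;
} tsl_lock;

/* Atomically sets X to 1 and returns the value X had before. */
static bool test_and_set(tsl_lock *lock) {
    return atomic_flag_test_and_set(&lock->x);
}

/* Spin until test_and_set reports that X was 0 before the call. */
static void enter_CS(tsl_lock *lock) {
    while (test_and_set(lock)) {
        /* busy wait: there is no queue, so there is no FIFO order
           and no bound on how long a process may keep spinning */
    }
}

/* Reset X to 0 so that one of the spinning processes can get in. */
static void leave_CS(tsl_lock *lock) {
    atomic_flag_clear(&lock->x);
}
```

Only the caller whose `test_and_set` sees the old value 0 gets in, which gives mutual exclusion. Nothing in the loop remembers who arrived first, which is why statements II and III do not hold.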

Problem-02:

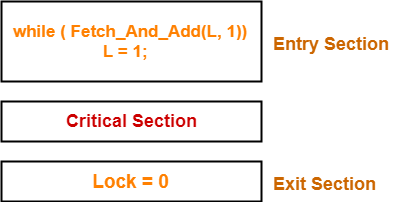

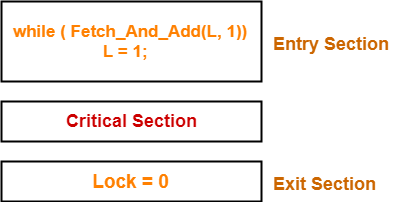

Fetch_And_Add(X,i) is an atomic Read-Modify-Write instruction that reads the value of memory location X, increments it by the value i and, returns the old value of X. It is used in the pseudo code shown below to implement a busy-wait lock. L is an unsigned integer shared variable initialized to 0. The value of 0 corresponds to lock being available, while any non-zero value corresponds to the lock being not available.

AcquireLock(L)

{

while (Fetch_And_Add(L,1))

L = 1;

}

ReleaseLock(L)

{

L = 0;

}

This implementation-

- (A) fails as L can overflow

- (B) fails as L can take on a non-zero value when the lock is actually available

- (C) works correctly but may starve some processes

- (D) works correctly without starvation

Solution-

The given synchronization mechanism is implemented by the AcquireLock(L) and ReleaseLock(L) routines given in the question.

Working-

This synchronization mechanism works as explained in the following scenes-

Scene-01:

- A process P1 arrives.

- It executes the Fetch_And_Add(L, 1) instruction.

- Since the lock value is 0, the instruction returns 0 to the while loop and sets the lock value to 0+1=1.

- The returned value 0 breaks the while loop condition.

- Process P1 enters the critical section and executes.

Scene-02:

- Another process P2 arrives.

- It executes the Fetch_And_Add(L, 1) instruction.

- Since the lock value is now 1, the instruction returns 1 to the while loop and sets the lock value to 1+1=2.

- The returned value 1 does not break the while loop condition.

- Process P2 executes the next instruction L=1, which sets the lock value to 1, and then checks the condition again.

- The lock value keeps changing from 1 to 2 and then from 2 back to 1.

- Process P2 keeps spinning in the while loop for as long as process P1 holds the lock.

- The while loop keeps process P2 busy until the lock value becomes 0 and the loop condition breaks.

Scene-03:

- Process P1 comes out of the critical section and sets the lock value to 0.

- The next Fetch_And_Add(L, 1) executed by process P2 returns 0 and sets the lock value to 1, so the while loop condition breaks.

- Now, process P2, which was waiting for the critical section, enters it.

Failure of the Mechanism-

- The mechanism fails to provide the synchronization among the processes.

- This is explained below-

Explanation-

The following sequence of scenes leaves the lock value non-zero even though no process is inside the critical section-

Scene-01:

- A process P1 arrives.

- It executes the Fetch_And_Add(L, 1) instruction.

- Since the lock value is 0, the instruction returns 0 to the while loop and sets the lock value to 0+1=1.

- The returned value 0 breaks the while loop condition.

- Process P1 enters the critical section and executes.

Scene-02:

- Another process P2 arrives.

- It executes the Fetch_And_Add(L, 1) instruction.

- Since the lock value is now 1, the instruction returns 1 to the while loop and sets the lock value to 1+1=2.

- The returned value 1 does not break the while loop condition.

- Now, just as process P2 is about to execute the body of the while loop (L = 1), it gets preempted.

Scene-03:

- Process P1 comes out of the critical section and sets the lock value to 0.

Scene-04:

- Process P2 gets scheduled again.

- It resumes its execution.

- Before preemption, it had already evaluated the while loop condition as true.

- Now, it resumes execution from the next instruction, L = 1.

- It sets the lock value to 1, and this is where the mechanism breaks.

- The lock is actually available (lock value = 0 and no process is inside the critical section), yet P2 itself sets the lock value to 1.

- Then, it checks the condition again. Fetch_And_Add returns a non-zero value, and no process is inside the critical section to set the lock value back to 0.

- Thus, process P2 gets trapped inside the while loop forever.

- All future processes will be trapped inside the while loop forever as well.

Thus,

- Although mutual exclusion is preserved in this scenario, the mechanism still fails.

- This is because the lock value is set to a non-zero value even though the lock is available.
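
The four scenes above can be replayed deterministically in a single thread. The following is a minimal sketch, assuming hypothetical names (`outcome`, `replay_failure`) and a plain, non-atomic `fetch_and_add` helper that only models the instruction's return value. The preemption of P2 is modelled simply by the order of the statements.

```c
#include <stdbool.h>

/* Result of the replay; the type and field names are hypothetical. */
typedef struct {
    unsigned lock_value;        /* L after P2's stale write */
    bool cs_occupied;           /* is any process in the critical section? */
    bool next_attempt_blocked;  /* does the next Fetch_And_Add see non-zero? */
} outcome;

/* Models Fetch_And_Add(X, i): add i to X and return the old value. */
static unsigned fetch_and_add(unsigned *x, unsigned i) {
    unsigned old = *x;
    *x += i;
    return old;
}

static outcome replay_failure(void) {
    unsigned L = 0;             /* 0 means the lock is available */
    bool cs_occupied = false;

    /* Scene-01: P1 reads 0, L becomes 1, P1 enters the critical section. */
    if (fetch_and_add(&L, 1) == 0) cs_occupied = true;

    /* Scene-02: P2 reads 1, L becomes 2, so P2 must run the loop body.
       P2 is preempted here, before it executes L = 1. */
    bool p2_must_run_body = (fetch_and_add(&L, 1) != 0);

    /* Scene-03: P1 leaves the critical section and releases the lock. */
    L = 0;
    cs_occupied = false;

    /* Scene-04: P2 resumes and executes the pending L = 1. */
    if (p2_must_run_body) L = 1;

    outcome o;
    o.lock_value = L;
    o.cs_occupied = cs_occupied;
    /* Any later attempt (by P2 or a new process) reads a non-zero value. */
    o.next_attempt_blocked = (fetch_and_add(&L, 1) != 0);
    return o;
}
```

Calling `replay_failure()` ends with the lock value at 1 while no process is inside the critical section, which is exactly the situation described in the second option of the problem.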

Also,

| This synchronization mechanism leads to overflow of value ‘L’. |

Explanation-

- If every process gets preempted right after evaluating the while loop condition (before it executes L = 1), the value of the lock keeps increasing with every arriving process.

- When the first process arrives, Fetch_And_Add returns 0 and sets the lock value to 0+1=1, and the process gets preempted.

- When the second process arrives, Fetch_And_Add returns 1 and sets the lock value to 1+1=2, and the process gets preempted.

- When the third process arrives, Fetch_And_Add returns 2 and sets the lock value to 2+1=3, and the process gets preempted.

- Thus, for a very large number of processes preempted in the above manner, L will overflow and wrap around to 0.
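
The wraparound can be made concrete with a deliberately small lock variable. The sketch below assumes an 8-bit `L` purely to keep the numbers small; the problem only says that L is an unsigned integer, and for a w-bit variable the same effect needs 2^w processes preempted in this way. The helper names are hypothetical.

```c
#include <stdint.h>

/* Models Fetch_And_Add on an 8-bit unsigned lock (width is an assumption). */
static uint8_t fetch_and_add8(uint8_t *x, uint8_t i) {
    uint8_t old = *x;
    *x = (uint8_t)(old + i);    /* unsigned arithmetic wraps modulo 256 */
    return old;
}

/* Every arriving process executes Fetch_And_Add once and is preempted
   before it can execute L = 1; no process ever releases the lock.
   Returns how many processes read 0 and therefore enter. */
static unsigned entries_without_release(unsigned arrivals) {
    uint8_t L = 0;
    unsigned entered = 0;
    for (unsigned p = 0; p < arrivals; p++) {
        if (fetch_and_add8(&L, 1) == 0) {
            entered++;
        }
    }
    return entered;
}
```

With 256 arrivals only the first process enters, but the 257th arrival reads 0 again after the wraparound, so `entries_without_release(257)` reports two processes entering without any release in between.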

Also,

| This synchronization mechanism does not guarantee bounded waiting and may cause starvation. |

Explanation-

- There might exist an unlucky process which, when it arrives to execute the critical section, finds it busy.

- So, it keeps waiting in the while loop and eventually gets preempted.

- When it gets rescheduled and tries to enter the critical section again, it finds another process executing the critical section.

- So, again, it keeps waiting in the while loop and eventually gets preempted.

- This may happen any number of times, so the unlucky process may starve and never enter the critical section.

Thus, Options (A) and (B) are correct.

Problem-03:

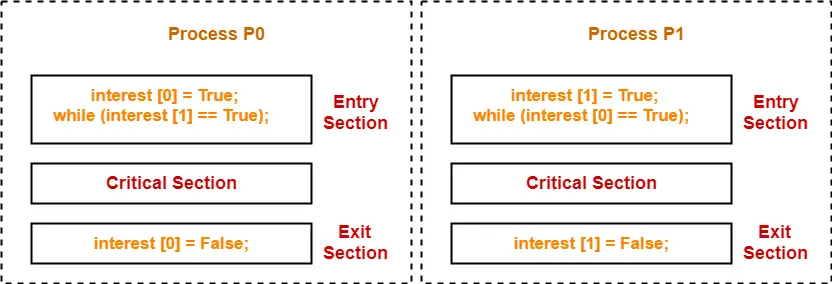

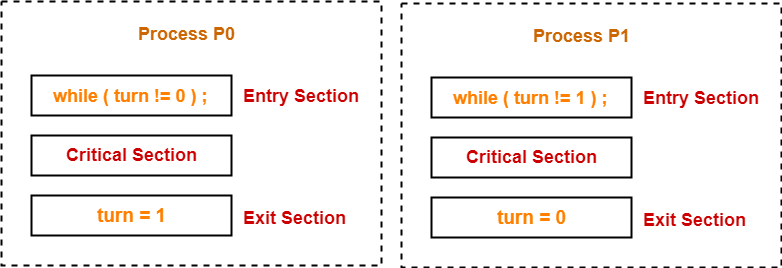

Consider the methods used by processes P1 and P2 for accessing their critical sections whenever needed, as given below. The initial values of shared Boolean variables S1 and S2 are randomly assigned.

| Method used by P1 | Method used by P2 |
| --- | --- |
| while (S1 == S2); | while (S1 != S2); |
| Critical Section | Critical Section |
| S1 = S2 | S2 = !S1 |

Which one of the following statements describes the properties achieved?

- (A) Mutual exclusion but not progress

- (B) Progress but not mutual exclusion

- (C) Neither mutual exclusion nor progress

- (D) Both mutual exclusion and progress

Solution-

The initial values of shared Boolean variables S1 and S2 are randomly assigned. The assigned values may be-

- S1 = 0 and S2 = 0

- S1 = 0 and S2 = 1

- S1 = 1 and S2 = 0

- S1 = 1 and S2 = 1

Case-01: If S1 = 0 and S2 = 0-

In this case,

- Process P1 keeps spinning in its while loop because S1 == S2.

- However, process P2 gets the chance to execute.

- Process P2 breaks the while loop condition, executes the critical section and then sets S2 = !S1 = 1.

- Now, S1 = 0 and S2 = 1.

- Now, process P2 cannot enter the critical section again but process P1 can enter the critical section.

- Process P1 breaks the while loop condition, executes the critical section and then sets S1 = S2 = 1.

- Now, S1 = 1 and S2 = 1.

- Now, process P1 cannot enter the critical section again but process P2 can enter the critical section.

Thus,

- Processes P1 and P2 execute the critical section alternately, starting with process P2.

- Mutual exclusion is guaranteed.

- Progress is not guaranteed because if the process whose turn it is does not want to enter the critical section, the other process can never enter it again.

- Processes necessarily have to execute the critical section in strict alternation.

Case-02: If S1 = 0 and S2 = 1-

In this case,

- Process P2 keeps spinning in its while loop because S1 != S2.

- However, process P1 gets the chance to execute.

- Process P1 breaks the while loop condition, executes the critical section and then sets S1 = S2 = 1.

- Now, S1 = 1 and S2 = 1.

- Now, process P1 cannot enter the critical section again but process P2 can enter the critical section.

- Process P2 breaks the while loop condition, executes the critical section and then sets S2 = !S1 = 0.

- Now, S1 = 1 and S2 = 0.

- Now, process P2 cannot enter the critical section again but process P1 can enter the critical section.

Thus,

- Processes P1 and P2 execute the critical section alternately, starting with process P1.

- Mutual exclusion is guaranteed.

- Progress is not guaranteed because if the process whose turn it is does not want to enter the critical section, the other process can never enter it again.

- Processes necessarily have to execute the critical section in strict alternation.

Case-03: If S1 = 1 and S2 = 0-

- This case behaves the same as Case-02 because S1 != S2, so process P1 enters first.

Case-04: If S1 = 1 and S2 = 1-

- This case behaves the same as Case-01 because S1 == S2, so process P2 enters first.

Thus, overall we can conclude-

- Processes P1 and P2 execute the critical section alternately.

- Mutual exclusion is guaranteed.

- Progress is not guaranteed because if the process whose turn it is does not want to enter the critical section, the other process can never enter it again.

- Processes necessarily have to execute the critical section in strict alternation.

Thus, Option (A) is correct.
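
The case analysis for Problem-03 can be checked with a small state simulation. This is a sketch under stated assumptions: the function names and the `order` array are hypothetical, and each loop iteration models one complete critical-section entry and exit by whichever process is allowed to pass its while loop.

```c
#include <stdbool.h>

/* P1 spins while S1 == S2, so it can enter only when S1 != S2. */
static bool p1_can_enter(bool s1, bool s2) { return s1 != s2; }

/* P2 spins while S1 != S2, so it can enter only when S1 == S2. */
static bool p2_can_enter(bool s1, bool s2) { return s1 == s2; }

/* Simulates `rounds` critical-section entries from the given initial
   values and records which process (1 or 2) entered in each round.
   Returns false if both processes, or neither, could enter at once. */
static bool simulate(bool s1, bool s2, int rounds, int order[]) {
    for (int r = 0; r < rounds; r++) {
        bool p1 = p1_can_enter(s1, s2);
        bool p2 = p2_can_enter(s1, s2);
        if (p1 == p2) {
            return false;       /* would break mutual exclusion or deadlock */
        }
        if (p1) {
            order[r] = 1;
            s1 = s2;            /* exit code of P1: S1 = S2 */
        } else {
            order[r] = 2;
            s2 = !s1;           /* exit code of P2: S2 = !S1 */
        }
    }
    return true;
}
```

For each of the four initial assignments, exactly one process is admitted per round and the recorded order alternates strictly, starting with P2 when S1 == S2 and with P1 otherwise. The simulation assumes the admitted process always wants to enter; if it never does, the loop would stall, which is the missing progress property.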
